What is cuDNN? Difference between cuDNN and CUDA

cuDNN plays a key role in optimizing the performance of deep learning training and inference on NVIDIA GPUs.

Unlike general-purpose CUDA, which provides a parallel computing platform and programming model, cuDNN is specially designed to expedite deep learning tasks.

By the end of this blog post you'll know what cuDNN is. We'll also highlight the key differences between cuDNN and CUDA, and provide real-life applications to illustrate how these technologies are transforming various industries.

What is cuDNN?

cuDNN, which stands for CUDA Deep Neural Network library, is a GPU-accelerated library created by NVIDIA to make deep learning tasks faster and more efficient.

You can think of cuDNN as a powerful assistant that helps your computer's GPU handle the heavy lifting involved in training and running neural networks.

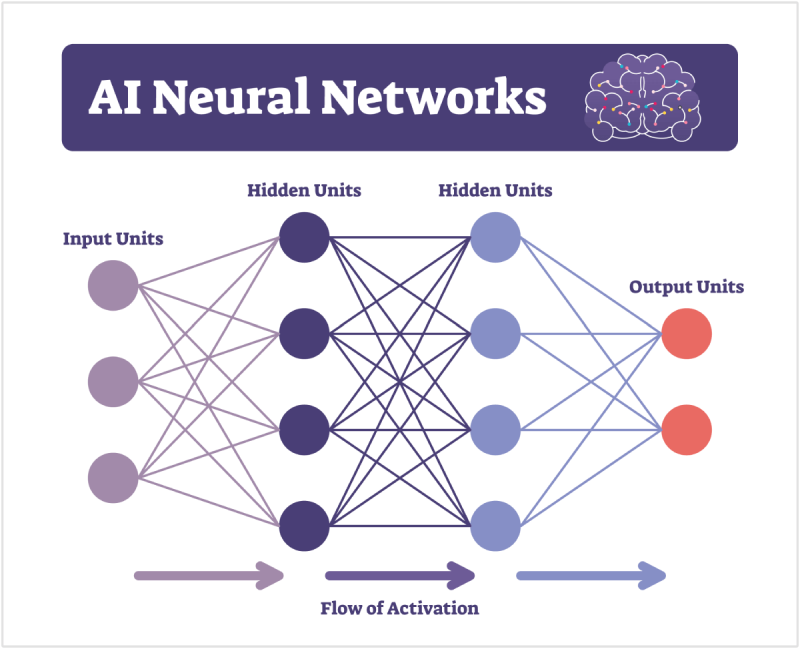

Neural networks are a type of artificial intelligence (AI) model loosely inspired by how our brains work, enabling computers to recognize patterns and make decisions.

Neural networks require a lot of calculations, which can be very time-consuming. cuDNN speeds up these calculations on the GPU, making it possible to train neural networks much faster than on a CPU alone.

What is the Difference between cuDNN and CUDA?

CUDA, which stands for Compute Unified Device Architecture, is a parallel computing platform and programming model created by NVIDIA.

It allows developers to use NVIDIA GPUs to perform general-purpose computing tasks, not just graphics processing.

Essentially, CUDA lets your computer's GPU do more than just render images – it can also perform complex calculations very quickly.

cuDNN, on the other hand, is a specialized library built on top of CUDA and designed specifically to speed up deep learning operations.

While CUDA can handle many different types of tasks, cuDNN focuses solely on neural networks. It provides highly optimized routines for common deep learning operations.

There are also two major differences between cuDNN and CUDA, namely:

Level of Abstraction

- CUDA: Working with CUDA often means writing more detailed and lower-level code. You need to manage a lot of the specifics of how tasks are divided and run on the GPU.

- cuDNN: cuDNN abstracts much of this complexity away. It provides higher-level functions that are already optimized for neural network operations, so you don't need to worry about the lower-level details. This makes it easier and faster to implement deep learning models.
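To make the contrast concrete, here's a minimal Python sketch that only emulates how a CUDA-style kernel splits a vector addition across blocks and threads. It isn't real GPU code: the names `vector_add_kernel` and `launch` are made up for illustration, and the loops run one after another on the CPU. The index arithmetic and the bounds check mirror the kind of bookkeeping you'd typically write by hand in a CUDA kernel.

```python
import math


def vector_add_kernel(block_idx, thread_idx, block_dim, a, b, out):
    """Body of a hypothetical kernel: each (block, thread) pair handles one element."""
    i = block_idx * block_dim + thread_idx  # global element index
    if i < len(out):  # guard: the last block may have spare threads
        out[i] = a[i] + b[i]


def launch(a, b, block_dim=4):
    """Emulate a kernel launch by looping over every block and thread on the CPU."""
    n = len(a)
    out = [0.0] * n
    grid_dim = math.ceil(n / block_dim)  # number of blocks needed to cover n elements
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            vector_add_kernel(block_idx, thread_idx, block_dim, a, b, out)
    return out


result = launch([1.0, 2.0, 3.0, 4.0, 5.0], [10.0, 20.0, 30.0, 40.0, 50.0])
print(result)  # [11.0, 22.0, 33.0, 44.0, 55.0]
```

Five elements with four threads per block need two blocks, and the bounds check keeps the three spare threads in the second block from writing out of range. A library like cuDNN hides this kind of work division behind a single routine call.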

Ease of Use

- CUDA: Because it’s more general and lower-level, using CUDA can be more complex and time-consuming. It requires a good understanding of parallel computing and GPU architecture.

- cuDNN: cuDNN is designed to be easy to use for developers working on deep learning. It simplifies many tasks and includes highly efficient implementations of common neural network operations like convolutions, activation functions, and normalization.

How is cuDNN Used in the Real World?

cuDNN is a powerful tool that makes deep learning applications faster and more efficient, enabling a wide range of real-world applications.

Here are some examples of how cuDNN is used in various fields:

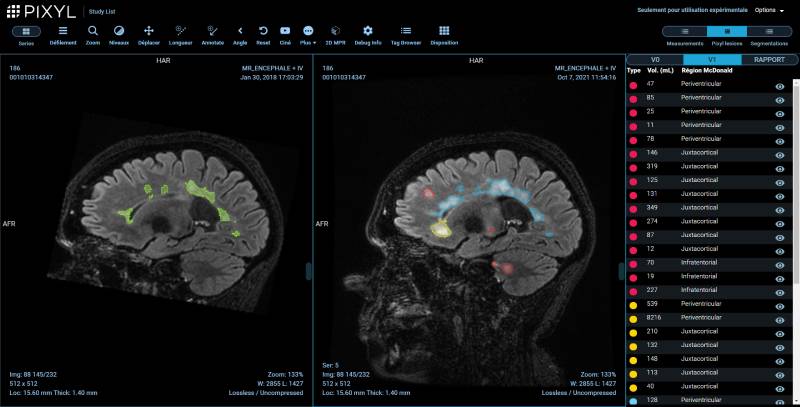

1. Medical Imaging

cuDNN accelerates the processing of medical images, helping doctors detect diseases like cancer.

For instance, it speeds up the deep learning models that analyze MRI and CT scans, which can lead to quicker diagnoses and better patient outcomes.

Source: Wiley

2. Self-Driving Cars

In the automotive industry, cuDNN is used to train neural networks that power self-driving cars.

These networks analyze vast amounts of sensor data in real time to make decisions on navigation, obstacle detection, and pedestrian recognition, making autonomous driving safer and more reliable.

3. Fraud Detection

Banks and financial institutions use cuDNN to build models that detect fraudulent transactions. By analyzing patterns in transaction data, these models can quickly identify and flag suspicious activities, helping prevent financial fraud and protect customers.

4. Video Streaming

Leading streaming services use cuDNN to enhance their recommendation systems. By quickly analyzing user preferences and viewing histories, these systems provide personalized content suggestions, improving user experience and engagement.

5. Customer Insights

Retailers leverage cuDNN to analyze customer behavior and predict trends.

This helps them optimize inventory management, personalize marketing campaigns, and improve customer satisfaction by offering products that match consumer preferences.

6. Crop Monitoring

In agriculture, cuDNN-powered drones and sensors analyze crop health and soil conditions.

This real-time data helps farmers make informed decisions about irrigation, fertilization, and pest control, leading to higher crop yields and sustainable farming practices.

7. Surveillance Systems

Modern surveillance systems use cuDNN to enhance video analysis and object recognition. This allows for quicker detection of potential threats and improved security monitoring in public spaces, airports, and other critical areas.

cuDNN Key Features

Great, now that you know what cuDNN is and the major differences between this technology and CUDA, let's take a closer look at some of the key features of cuDNN.

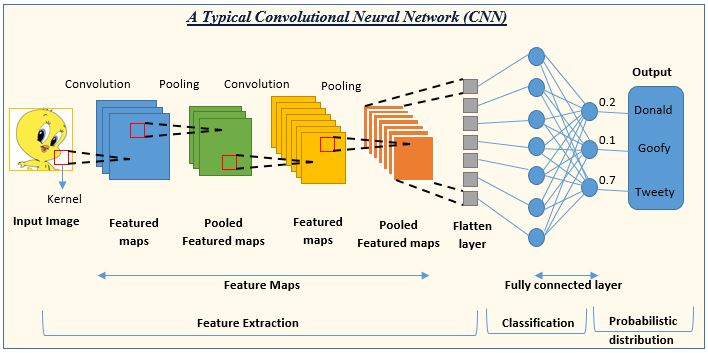

Convolution Operations

A convolution operation is a mathematical process used primarily in the field of image and signal processing.

In simple terms, it involves taking small matrices called kernels (or filters) and sliding them over input data (like an image) to produce feature maps.

These feature maps help neural networks detect and recognize patterns, such as edges, textures, and shapes, which are crucial for tasks like image classification, object detection, and more.

cuDNN enhances this process by providing highly optimized routines for convolution operations, making them faster and more efficient.
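The sliding-window idea can be sketched in a few lines of plain Python. This is an unoptimized illustration, not how cuDNN implements it: the function name `conv2d`, the tiny 4x4 image, and the 2x2 edge kernel are all assumptions chosen for the example. Like most deep learning frameworks, the sketch does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def conv2d(image, kernel, stride=1):
    """Slide `kernel` over `image` (no padding) and return the feature map."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = (len(image) - k_h) // stride + 1
    out_w = (len(image[0]) - k_w) // stride + 1
    feature_map = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Multiply the kernel with the patch under it and sum the products.
            total = 0
            for i in range(k_h):
                for j in range(k_w):
                    total += image[r * stride + i][c * stride + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


# A 4x4 image with a vertical edge between the dark (0) and bright (1) halves.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A 2x2 kernel that responds where values increase from left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is non-zero only in the middle column, which is exactly where the edge sits. The four nested loops are also a hint at why optimized GPU routines matter: real networks run this over millions of pixels and many kernels at once.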

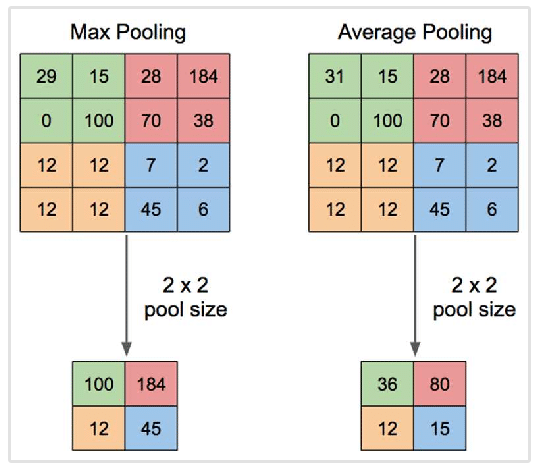

Pooling Operations

Pooling operations are used in deep learning to reduce the size of the data while preserving important features.

Imagine you have a high-resolution image, but you want to simplify it without losing the essential parts.

Pooling helps with this by summarizing the information in small regions of the image.

There are different types of pooling, with the most common ones being max pooling and average pooling.

cuDNN supports pooling operations by using NVIDIA GPUs to perform these tasks much faster than CPUs, thereby speeding up the training process of neural networks.

It offers flexibility by supporting various types of pooling and different window sizes and strides, giving developers control over how data is reduced.

Additionally, cuDNN simplifies the implementation of pooling operations with straightforward functions, reducing the time and effort needed for coding.

It also integrates seamlessly with popular deep learning frameworks like TensorFlow, PyTorch, and Caffe, making it easy to add efficient pooling operations to existing projects.
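Here's a small pure-Python sketch of max and average pooling with a configurable window size and stride. The function name `pool2d` and its parameters are hypothetical and chosen for this example; they are not cuDNN's API.

```python
def pool2d(data, window=2, stride=2, mode="max"):
    """Summarize each window x window region of a 2D list with its max or average."""
    if mode not in ("max", "avg"):
        raise ValueError("mode must be 'max' or 'avg'")
    out_h = (len(data) - window) // stride + 1
    out_w = (len(data[0]) - window) // stride + 1
    pooled = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Collect every value that falls inside the current window.
            region = [
                data[r * stride + i][c * stride + j]
                for i in range(window)
                for j in range(window)
            ]
            row.append(max(region) if mode == "max" else sum(region) / len(region))
        pooled.append(row)
    return pooled


data = [
    [1, 3, 2, 4],
    [5, 7, 6, 8],
    [9, 2, 1, 0],
    [3, 4, 5, 6],
]
print(pool2d(data, mode="max"))  # [[7, 8], [9, 6]]
print(pool2d(data, mode="avg"))  # [[4.0, 5.0], [4.5, 3.0]]
```

With a 2x2 window and a stride of 2, the 4x4 input shrinks to 2x2. Max pooling keeps the strongest value in each region, while average pooling smooths the region into a single number.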

Normalization Operations

Normalization operations help standardize the input data, improving the training efficiency and stability of neural networks.

By adjusting and scaling the data, normalization ensures that the inputs to each layer of the neural network have a consistent distribution, which can lead to faster convergence and better performance.

cuDNN provides optimized functions for performing these normalization operations efficiently.

Just like with convolution and pooling, cuDNN offers pre-built routines for shifting and scaling data, such as batch normalization.

Programmers can specify the normalization technique they want to use, and cuDNN handles the underlying calculations, ensuring the data is prepared for smooth and efficient training within the deep learning model.
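As a rough illustration of what "adjusting and scaling" means, here's a minimal sketch of one common technique, batch normalization, applied to a single list of numbers. Picking batch normalization is our assumption for the example, and the names `batch_norm`, `gamma`, `beta`, and `eps` are illustrative rather than cuDNN's API.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize a batch to zero mean and unit variance, then scale and shift."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    # eps keeps the division safe when the variance is close to zero.
    return [gamma * (v - mean) / math.sqrt(variance + eps) + beta for v in values]


activations = [2.0, 4.0, 6.0, 8.0]
normalized = batch_norm(activations)
print([round(v, 3) for v in normalized])
```

After normalization the values are centered on zero with a spread close to one. In a real network, `gamma` and `beta` are learned during training so each layer can still rescale its inputs when that helps.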

Recurrent Neural Networks

Recurrent Neural Networks (RNNs) are a type of artificial neural network designed to recognize patterns in sequences of data, such as time series, speech, text, or any data where the order of the inputs is important.

Unlike traditional feedforward neural networks, RNNs have connections that loop back on themselves, allowing information to be passed from one step of the sequence to the next.

This ability to maintain a "memory" of previous inputs makes RNNs particularly powerful for tasks involving sequential data.

cuDNN offers highly optimized routines for RNN operations, greatly accelerating both training and inference. This allows you to develop and deploy RNN models faster, making real-time applications more feasible.
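The "memory" idea is easier to see in code. Below is a minimal sketch of a one-unit RNN, where the hidden state from each step is fed back in at the next step. The function names and the weights (`w_x`, `w_h`, `b`) are arbitrary values picked for illustration; a real network learns them during training and uses matrices instead of single numbers.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a one-unit RNN: h_t = tanh(w_x * x_t + w_h * h_prev + b)."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the cell, carrying the hidden state forward."""
    h = 0.0  # initial hidden state: no memory yet
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


states = run_rnn([1.0, 0.0, 0.0])
print([round(h, 3) for h in states])
```

Only the first input is non-zero, yet the later hidden states stay above zero because each step receives the previous state. That carried-over state is what lets the network remember earlier parts of a sequence, and because each step depends on the one before it, optimized routines make a big difference for long sequences.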

Career Opportunities

Understanding what cuDNN is and knowing how to use it is valuable for a career in AI or machine learning.

As deep learning and AI technologies continue to evolve, the demand for skilled professionals who can effectively utilize cuDNN is on the rise.

Here’s an overview of the exciting career paths and potential employers:

- AI Architects: These professionals design and oversee the implementation of AI solutions, ensuring that they meet organizational goals. Proficiency in cuDNN is crucial for optimizing deep learning models and improving their performance.

- Deep Learning Engineers: Specializing in developing and training neural networks, deep learning engineers benefit greatly from cuDNN’s ability to accelerate computations and enhance model efficiency.

- Data Scientists: By leveraging cuDNN, data scientists can build more sophisticated models to analyze complex datasets, leading to deeper insights and more accurate predictions.

- Machine Learning Researchers: These experts push the boundaries of AI by developing new algorithms and techniques. cuDNN provides the computational power needed to experiment and iterate rapidly.

On that note, if you're looking for a job in artificial intelligence or data science, check out all of our job postings on AI Jobs.

We've got hundreds of open positions from various tech companies all over the globe.

Wrapping Up

cuDNN boosts the efficiency of neural network operations, enabling faster training and improved performance across various applications.

Whether in healthcare, autonomous vehicles, or fraud detection, cuDNN is a key driver behind many modern technological advancements, making it an indispensable tool for developers and researchers alike.