Autoregressive Language Models: What are They & How are They Used?

Have you ever wondered how a machine can predict the next word you're going to type, or even complete your sentences entirely?

This new technology is powered by a type of artificial intelligence known as an autoregressive language model.

At their core, autoregressive language models analyze sequences of words, like text or speech, to predict the most likely word that comes next.

In this blog post, we'll explore how autoregressive language models work and look at two of the most widely used language models, along with the tasks people and organizations use them for.

What is an Autoregressive Language Model?

An autoregressive language model is a type of artificial intelligence that predicts the next word in a sentence based on the words that came before it.

Popular examples of autoregressive language models include:

- GPT-3

- GPT-4

- Google Gemini/Bard

These models are trained on massive amounts of text data, allowing them to learn the patterns and relationships between words.

To give you an idea of the huge volume of data involved, various sources report that ChatGPT was trained on about 570 GB of text, including books, Wikipedia articles, web pages, and other sources.

This enables them to generate human-like text, translate languages, write different kinds of creative content, and even answer your questions in an informative way.

How can Autoregressive Language Models Predict the Next Word?

So we know that autoregressive language models learn the patterns between words, but how exactly does this work?

Imagine you're playing a word association game.

Someone says "beach," and you blurt out "sand."

An autoregressive language model works in a similar way, but on a much grander scale.

These models are trained on massive datasets of text drawn from various sources. Through this training, they learn the intricacies of language: syntax, semantics, and even style.

The process starts with what's known as a prompt—a word, phrase, or sentence input by a user.

From this initial prompt, the model begins its work, predicting the next most likely word based on the context provided thus far.

Each new word generated becomes part of the prompt for the subsequent word prediction. It's a continuous, iterative process, where the model uses the entirety of the text generated up to that point to inform its next choice.
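To make the loop concrete, here is a minimal sketch in Python. The `NEXT_WORD` table and the `predict_next` and `generate` functions are hypothetical stand-ins invented for this illustration; a real model scores every possible next word using the whole text so far, while this toy version simply looks up the last word.

```python
# Toy lookup table standing in for a trained model (made-up data).
NEXT_WORD = {
    "the": "quick",
    "quick": "brown",
    "brown": "fox",
    "fox": "jumps",
    "jumps": "over",
}


def predict_next(words):
    """Return the next word for the text so far, or None to stop.

    Assumption: only the last word is used. A real autoregressive
    model conditions on the entire sequence.
    """
    return NEXT_WORD.get(words[-1].lower())


def generate(prompt, max_new_words=10):
    """Extend the prompt one word at a time."""
    words = prompt.split()
    if not words:
        return prompt
    for _ in range(max_new_words):
        next_word = predict_next(words)
        if next_word is None:
            break
        # The new word becomes part of the context for the next step.
        words.append(next_word)
    return " ".join(words)


print(generate("The quick"))
```

Each pass through the loop appends one word and then predicts again from the longer text, which is the iterative process described above.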

The power of these models lies in their understanding of language patterns and probabilities.

For instance, if the prompt is "The quick brown fox," the model recognizes, based on its training, that "jumps" is a likely next word.
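One simple way to picture where such a probability comes from is to count what follows a phrase in example text. The sketch below does exactly that; the three-sentence `CORPUS` and the `next_word_probs` function are made up for illustration, and real models learn these probabilities with neural networks rather than raw counts.

```python
from collections import Counter

# Tiny made-up corpus; a real model learns from vastly more text.
CORPUS = [
    "the quick brown fox jumps over the lazy dog",
    "the quick brown fox jumps high",
    "the quick brown fox runs away",
]


def next_word_probs(corpus, context):
    """Estimate P(next word | context) by counting what follows the context."""
    n = len(context)
    counts = Counter()
    for sentence in corpus:
        words = sentence.split()
        for i in range(len(words) - n):
            if words[i:i + n] == context:
                counts[words[i + n]] += 1
    total = sum(counts.values())
    if total == 0:
        return {}  # context never seen in the corpus
    return {word: count / total for word, count in counts.items()}


probs = next_word_probs(CORPUS, "the quick brown fox".split())
best = max(probs, key=probs.get)
print(probs)
print("Most likely next word:", best)
```

In this toy corpus, "jumps" follows the phrase in two of the three sentences, so it gets the highest estimated probability.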

But it doesn't stop at single words.

By analyzing larger patterns and structures, the model can generate complex sentences and detailed paragraphs, creating content that resonates with human readers.

Now that you know what an autoregressive language model is and how it predicts the next word in a sentence, let's take a look at two of the most popular language models that exist today, starting with one that is often mistaken for an autoregressive model.

BERT is Not an Autoregressive Language Model

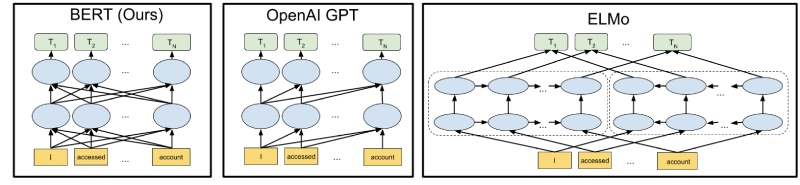

BERT, which stands for Bidirectional Encoder Representations from Transformers, is a ground-breaking model developed by researchers at Google AI in 2018.

One of the key innovations of BERT is its use of a bidirectional approach.

Unlike traditional autoregressive language models that analyze text in a left-to-right manner, BERT considers the entire sequence of words at once, allowing it to capture deeper context and relationships between words.
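The difference can be pictured as a grid showing which words each word is allowed to "see." The sketch below is a simplified illustration, not BERT's or GPT's actual code; the function names `causal_mask` and `bidirectional_mask` are chosen for this example, with 1 meaning visible and 0 meaning hidden.

```python
def causal_mask(n):
    """Row i marks what word i may look at: itself and earlier words only."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """Every word may look at every position in the sequence."""
    return [[1] * n for _ in range(n)]


tokens = ["the", "cat", "sat"]
masks = [
    ("left-to-right", causal_mask(len(tokens))),
    ("bidirectional", bidirectional_mask(len(tokens))),
]
for name, mask in masks:
    print(name)
    for token, row in zip(tokens, mask):
        print(f"  {token:>4}: {row}")
```

In the left-to-right grid, "the" sees only itself while "sat" sees all three words. In the bidirectional grid, every word sees the full sentence, which is the behavior described for BERT above.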

This unique approach has led to significant advancements in various real-world applications. BERT excels in tasks like:

- Search Engines: By understanding the full context of a query, BERT allows search engines to deliver more relevant results, significantly improving user satisfaction.

- Content Recommendations: Services like news feeds and streaming platforms use BERT to understand user preferences and content nuances, tailoring recommendations that resonate more accurately with individual tastes.

- Language Translation: BERT's deep understanding of language context enhances machine translation services, making cross-language communication more seamless and natural.

- Sentiment Analysis: Businesses leverage BERT to better grasp customer sentiment from reviews and social media, enabling more nuanced customer service strategies.

The Most Popular Autoregressive Language Model: GPT-4

GPT-4, short for Generative Pre-trained Transformer 4, represents the cutting-edge evolution in the series of transformers developed by OpenAI.

Building on the success of its predecessors, GPT-4 pushes the boundaries further in understanding and generating human-like text.

One of the significant strengths of GPT-4 is its ability to handle multimodal inputs.

This means it can process not only text but also images, and use both to generate more comprehensive and informative outputs.

Here are some potential real-world applications of GPT-4's capabilities:

- Content Creation: From blog posts to creative stories, GPT-4 assists in generating coherent and engaging content, dramatically reducing the time and effort required in content development.

- Customer Support: Implemented in chatbots and virtual assistants, GPT-4 can understand and respond to customer queries with unprecedented accuracy, improving the customer service experience.

- Language Translation: With its advanced understanding of context, GPT-4 enhances translation services, making communication across languages more fluid and accurate.

- Business Analytics: Analyzing data, compiling reports, and generating insights are streamlined thanks to GPT-4's ability to process and summarize large datasets efficiently.

Are Large Language Models the Same as Autoregressive Language Models?

No, large language models (LLMs) and autoregressive language models are not exactly the same, although there is a significant overlap.

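The key difference lies in what each term describes: "autoregressive" refers to how a model generates text (one word at a time, each based on the words before it), while "large" refers to the model's scale. Many of today's best-known large language models, such as GPT-4, are autoregressive, but not all large language models are.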

Some large language models might employ different techniques, such as bidirectional understanding or non-sequential predictions, to accomplish tasks like text classification, sentiment analysis, or question-answering without necessarily generating new text sequentially.

Large language models encapsulate a broader category that includes various methods of understanding and generating text, with autoregressive being one technique among many.

Think of it like this: Imagine a toolbox full of language processing tools. Autoregressive (AR) models would be a specific type of wrench within that toolbox, useful for certain tasks. LLMs represent the entire toolbox, containing various tools (including AR models) to handle diverse language processing needs.

Real Life Applications of Autoregressive Language Models

Image Generation and Processing

While you might think of autoregressive language models as purely text-based, they're actually useful for image generation and processing.

Autoregressive language models can analyze text descriptions and translate them into corresponding images.

Imagine providing a sentence like "A majestic lion basking on the golden savanna", and the model generates a picture that matches that description.

The process can also work in reverse.

An AR model can analyze an existing image and generate a descriptive caption. This has applications in automating image labeling for content management or creating captions for visually impaired users.

Natural Language Processing

Natural language processing (NLP) is a field where AR models truly shine. Their ability to analyze and predict word sequences makes them valuable tools for a variety of NLP tasks:

- Machine Translation: AR models can translate languages by analyzing the structure and sequence of words in one language and generating the most likely equivalent sentence in another. This allows for more natural-sounding translations compared to traditional rule-based methods.

- Text Summarization: By understanding the relationships between words and sentences, AR models can condense large chunks of text into concise summaries that capture the key points. This is useful for summarizing news articles, research papers, or any lengthy piece of text.

- Question Answering: AR models can analyze a passage of text and answer your questions about it in a comprehensive way. This is because they can not only identify relevant information but also understand the context and phrasing of your question.

- Chatbots: AR models power many of the chatbots you encounter online or through virtual assistants. They enable chatbots to understand your questions and requests, respond in a natural and informative way, and even hold conversations that mimic human interaction.

- Sentiment Analysis: By analyzing the sequence and tone of words, AR models can determine the sentiment of a piece of text. This is valuable for social media monitoring, customer service applications, and gauging public opinion on various topics.

These are just a few examples, and as AR models continue to evolve, we can expect them to play an even greater role in revolutionizing the way humans and machines interact through natural language.

Time-Series Predictions

One interesting application of the autoregressive approach is time-series prediction, where the sequence being modeled is numeric data rather than words.

By analyzing historical data sequences, these models can forecast future trends for various phenomena.
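As a rough illustration of the idea, the sketch below fits the simplest autoregressive model, often written AR(1), where each value is predicted from the one before it. The function names `fit_ar1` and `forecast` and the sales figures are invented for this example, and the fit assumes the series is not constant; real forecasting systems use more past values and more careful statistics.

```python
def fit_ar1(series):
    """Least-squares fit of x[t] = c + phi * x[t-1].

    Assumes the series has at least two different values.
    """
    prev, curr = series[:-1], series[1:]
    n = len(prev)
    mean_prev = sum(prev) / n
    mean_curr = sum(curr) / n
    cov = sum((p - mean_prev) * (q - mean_curr) for p, q in zip(prev, curr))
    var = sum((p - mean_prev) ** 2 for p in prev)
    phi = cov / var
    c = mean_curr - phi * mean_prev
    return c, phi


def forecast(series, steps):
    """Predict the next `steps` values, feeding each prediction back in."""
    c, phi = fit_ar1(series)
    history = list(series)
    for _ in range(steps):
        history.append(c + phi * history[-1])
    return history[len(series):]


# Made-up monthly sales that grow by 10% each month.
sales = [100.0, 110.0, 121.0, 133.1, 146.41]
print(forecast(sales, 2))
```

Because the made-up data grows by a steady 10%, the fitted model continues that pattern. Just as with text, each forecast is fed back in as input for the next one.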

Here are some examples of how these models can be used in real-life time-series prediction:

- Financial Forecasting: AR models can be used to predict stock prices, currency exchange rates, or even customer spending patterns. By analyzing historical financial data, they can identify patterns and trends, allowing investors and businesses to make more informed decisions.

- Demand Forecasting: Businesses can leverage AR models to predict future demand for their products. This helps them optimize inventory management, production scheduling, and marketing campaigns to meet customer needs effectively.

- Sales Forecasting: Similar to demand forecasting, AR models can analyze historical sales data to predict future sales trends. This allows companies to allocate resources efficiently and prepare for potential sales fluctuations.

- Supply Chain Management: By predicting future demand and potential disruptions, AR models can help optimize supply chain logistics. Businesses can ensure they have the right materials in stock at the right time, minimizing delays and production bottlenecks.

- Energy Consumption Prediction: Utility companies can use AR models to predict future energy consumption based on historical usage patterns, weather data, and other relevant factors. This allows them to optimize energy production and distribution, reducing costs and ensuring grid stability.

It's important to remember that time-series predictions are inherently probabilistic. While AR models can identify trends and make informed predictions, unexpected events can always influence the outcome.

However, these models offer a valuable tool for businesses and organizations to make data-driven decisions and navigate an uncertain future.

Limitations of Autoregressive Language Models

While autoregressive language models (AR models) have opened exciting possibilities in AI and language processing, they also have certain limitations:

Shortcoming in Long-Range Dependencies

AR models excel at predicting the next word based on the immediate sequence.

However, they can struggle to capture long-range dependencies within text, where the meaning of a word might depend on words much further back in the sequence.

This can lead to factual inconsistencies or nonsensical outputs when dealing with longer pieces of text.

Exposure Bias

During training, an AR model learns to predict each word from real text written by humans. When it generates text, however, it has to build on its own previous predictions, which can differ from anything it saw in training.

This mismatch is known as exposure bias: a small early mistake becomes part of the context for every later word, so errors can compound as the output gets longer.

Limited Reasoning Ability

AR models are not designed for true reasoning or understanding. They can identify patterns and relationships between words, but they cannot grasp the deeper meaning or logic behind the text.

This can lead to outputs that are superficially coherent but lack factual grounding.

Data-Dependence

The effectiveness of AR models heavily relies on the quality and quantity of data they are trained on. Biases or limitations within the training data can be reflected in the model's outputs.

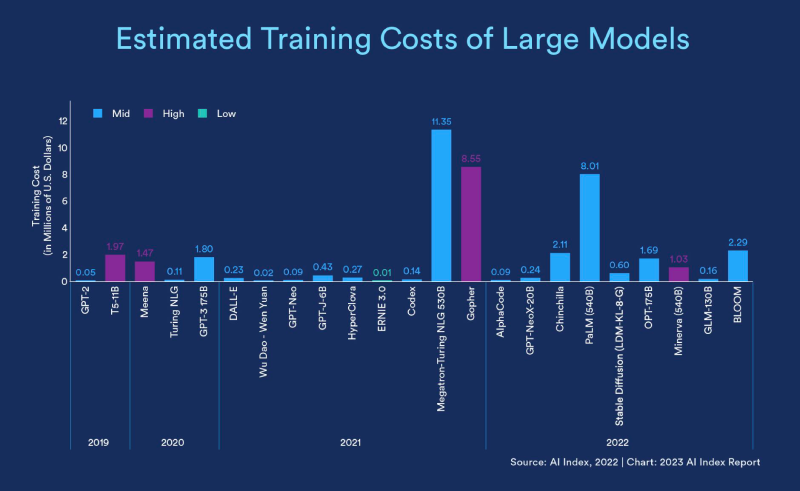

Computational Cost

Training large AR models requires significant computational resources. This can limit their accessibility and scalability for some applications.

Source: LinkedIn

Conclusion

Autoregressive language models are a fascinating branch of AI that can analyze and predict word sequences.

While limitations exist, AR models offer a powerful tool for various applications. As these models continue to evolve, they hold immense potential to revolutionize the way we interact with machines and navigate the vast world of information through the power of language.